How can a constrained, non-symbolic musical interface preserve authorship for users whose musical intent is still forming?

Goal: Investigate whether embedding harmonic structure directly into an instrument's control logic can support exploratory music-making without requiring symbolic music-theoretic knowledge.

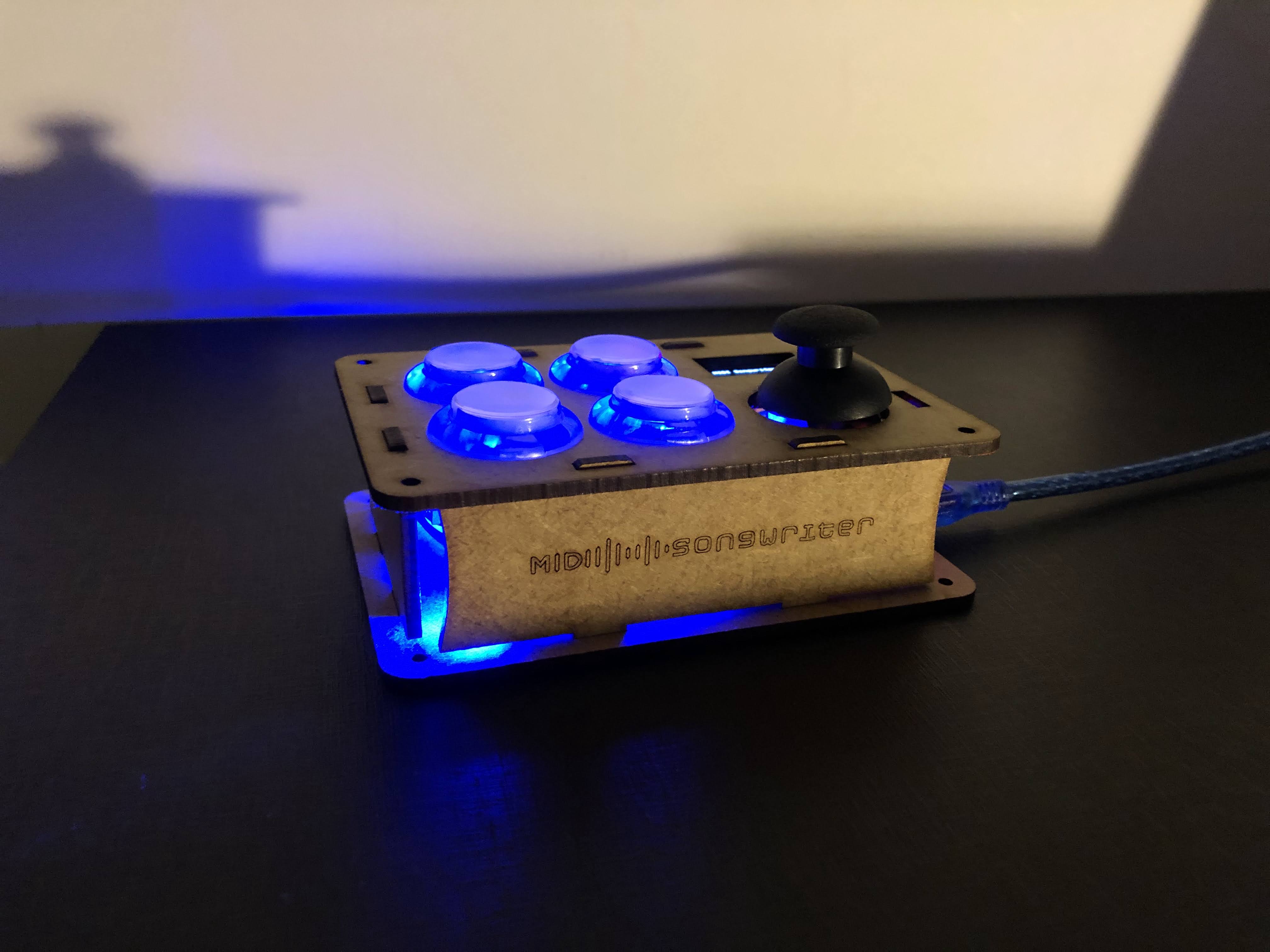

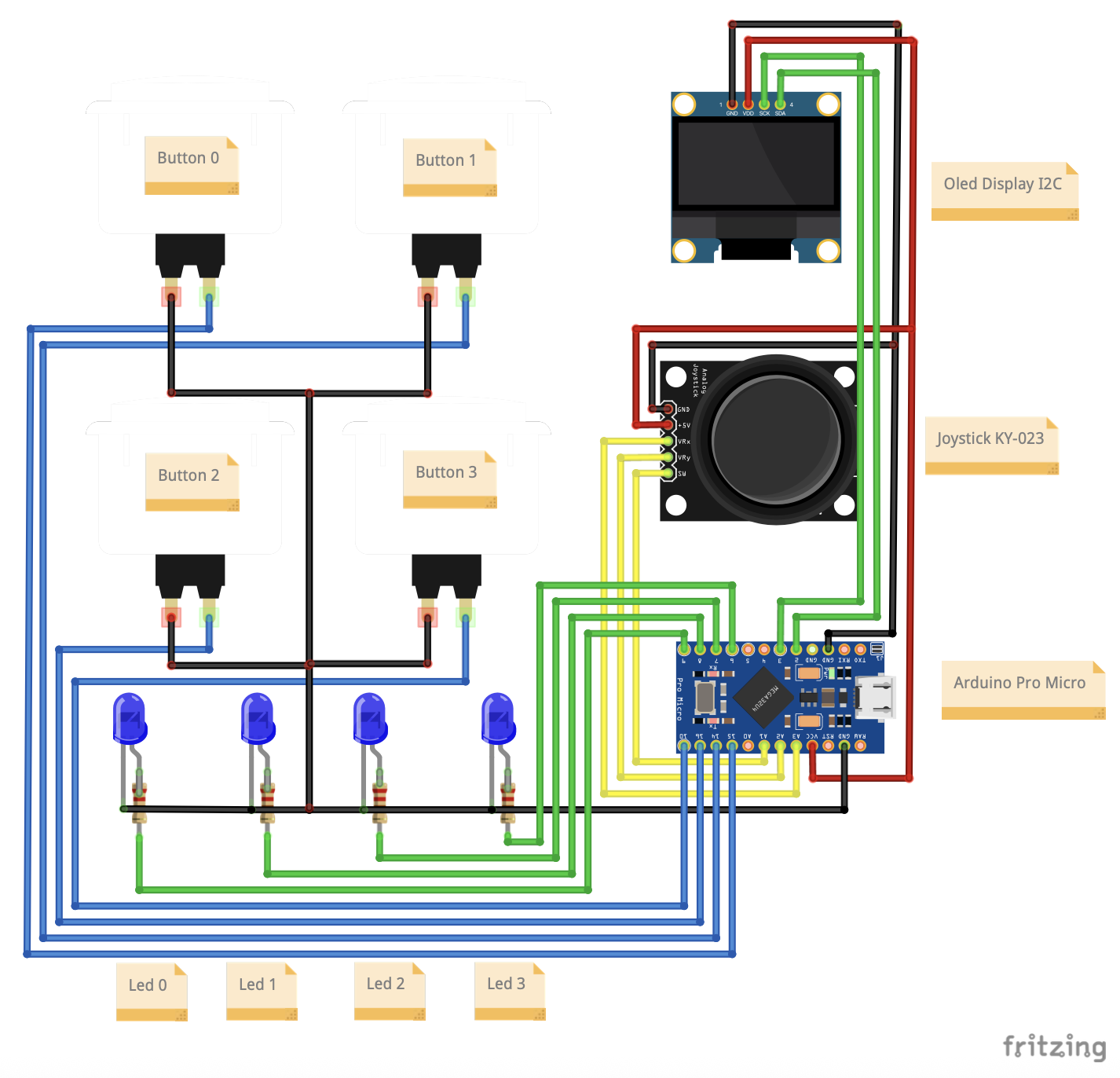

How it was built: MIDI-Songwriter is a microcontroller-based hardware instrument that outputs MIDI-over-USB to external sound engines.
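MIDI-over-USB carries ordinary MIDI messages wrapped in 4-byte event packets, as defined by the USB MIDI 1.0 device class. The sketch below shows that packing for note-on and note-off messages in standard C++. The function names are hypothetical, and the write-up does not say which USB-MIDI library the firmware uses. On native-USB Arduino boards, libraries such as MIDIUSB typically handle this packing internally.

```cpp
#include <array>
#include <cstdint>

// Hypothetical helpers: a USB-MIDI 1.0 event packet is
// [cable/CIN header, MIDI status, data1, data2].
using UsbMidiPacket = std::array<std::uint8_t, 4>;

// For channel-voice messages, the Code Index Number (CIN) equals the
// high nibble of the MIDI status byte (0x8 = note-off, 0x9 = note-on, ...).
constexpr UsbMidiPacket makeChannelPacket(std::uint8_t cable, std::uint8_t status,
                                          std::uint8_t data1, std::uint8_t data2) {
    return UsbMidiPacket{
        static_cast<std::uint8_t>(((cable & 0x0F) << 4) | (status >> 4)),
        status,
        static_cast<std::uint8_t>(data1 & 0x7F),  // MIDI data bytes are 7-bit
        static_cast<std::uint8_t>(data2 & 0x7F)};
}

// Note-on on a 0-based MIDI channel (0-15), virtual cable 0.
constexpr UsbMidiPacket noteOn(std::uint8_t channel, std::uint8_t note, std::uint8_t velocity) {
    return makeChannelPacket(0, static_cast<std::uint8_t>(0x90 | (channel & 0x0F)), note, velocity);
}

// Note-off with release velocity 0.
constexpr UsbMidiPacket noteOff(std::uint8_t channel, std::uint8_t note) {
    return makeChannelPacket(0, static_cast<std::uint8_t>(0x80 | (channel & 0x0F)), note, 0);
}
```

For example, `noteOn(0, 60, 100)` yields the packet `{0x09, 0x90, 60, 100}` (middle C on channel 1).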

Interaction is driven by discrete physical buttons, with visual feedback provided by RGB LEDs. Internally, the system is organized into distinct harmonic "pages," each corresponding to a stylistic harmonic vocabulary (e.g., pop, blues, rock). Each page defines a bounded harmonic state space, restricting chord choices to a stylistically coherent grammar.
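The following is a minimal sketch of how a page can bound the harmonic state space while keeping chord labels hidden. Each button on a page maps to a chord, stored as a root offset plus a chord quality relative to a key root. The types, the four-button pop page, and the three-button blues page are all hypothetical examples, not the instrument's actual vocabularies. The sketch uses `std::vector` for readability. Firmware for AVR-based Arduinos, which lack the C++ standard library, would more likely use fixed-size arrays.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical chord qualities available to pages.
enum class Quality { Major, Minor, Dominant7 };

// One button's chord: root offset in semitones above the key root, plus quality.
struct ChordSlot {
    int rootOffset;
    Quality quality;
};

// A page is a bounded vocabulary: only its slots can ever sound.
struct Page {
    const char* name;              // internal only; never shown to the user
    std::vector<ChordSlot> slots;  // one slot per button
};

// Semitone intervals above the chord root for each quality.
std::vector<int> intervalsFor(Quality q) {
    switch (q) {
        case Quality::Major:     return {0, 4, 7};
        case Quality::Minor:     return {0, 3, 7};
        case Quality::Dominant7: return {0, 4, 7, 10};
    }
    return {};
}

// Map a button press on a page to MIDI note numbers. Buttons without a slot
// produce no notes, so nothing outside the page's vocabulary can be played.
std::vector<std::uint8_t> notesForButton(const Page& page, std::size_t button, int keyRoot) {
    std::vector<std::uint8_t> notes;
    if (button >= page.slots.size()) return notes;
    const ChordSlot& slot = page.slots[button];
    for (int interval : intervalsFor(slot.quality)) {
        const int note = keyRoot + slot.rootOffset + interval;
        if (note >= 0 && note <= 127) notes.push_back(static_cast<std::uint8_t>(note));
    }
    return notes;
}

// Illustrative vocabularies only (I-V-vi-IV for pop, I7-IV7-V7 for blues).
const Page kPop{"pop", {{0, Quality::Major}, {7, Quality::Major}, {9, Quality::Minor}, {5, Quality::Major}}};
const Page kBlues{"blues", {{0, Quality::Dominant7}, {5, Quality::Dominant7}, {7, Quality::Dominant7}}};
```

Switching pages swaps the whole vocabulary at once. The user chooses a style and a button, and the chord spelling stays internal to the firmware.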

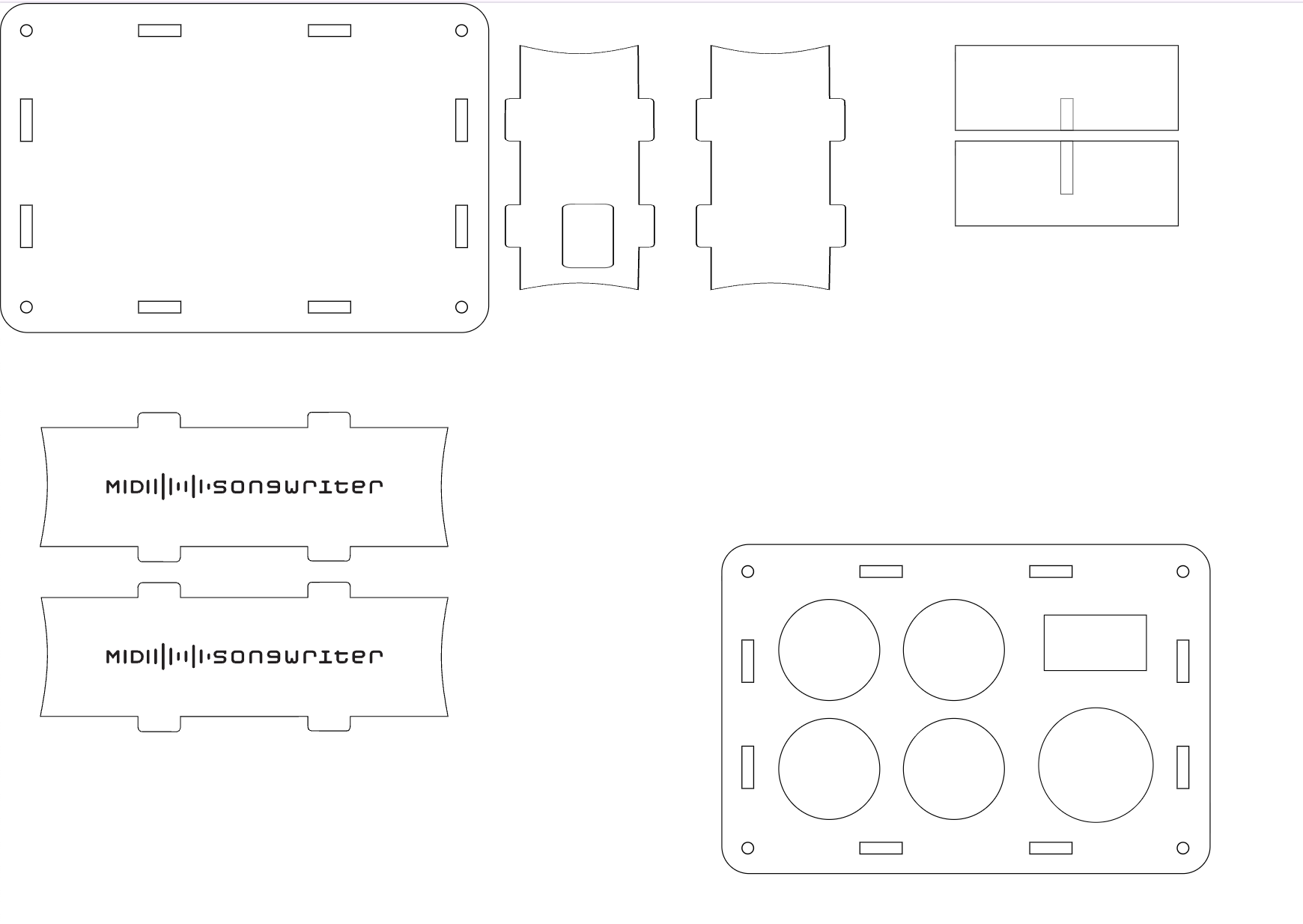

Implementation: Designed and fabricated a custom enclosure and tactile interface, and implemented embedded firmware in C++ on Arduino. The firmware manages real-time input processing, harmonic state transitions, MIDI event generation, looping functionality, and LED feedback. By encoding harmonic constraints directly into the system's state logic, the instrument ensures immediate musical coherence without exposing chord labels or theoretical abstractions to the user.
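The write-up does not describe how the looping functionality works. The following is a minimal sketch, assuming a fixed loop length and millisecond timestamps such as those returned by Arduino's `millis()`. Each press is stored as an offset into the loop and replayed whenever playback crosses that offset. `LoopRecorder`, `record`, and `due` are hypothetical names.

```cpp
#include <cstdint>
#include <vector>

// A recorded press, positioned relative to the start of the loop.
struct LoopEvent {
    std::uint32_t offsetMs;  // time since loop start
    std::uint8_t button;     // which chord button was pressed
};

class LoopRecorder {
public:
    // lengthMs must be > 0; the loop is assumed to start at absolute time 0.
    explicit LoopRecorder(std::uint32_t lengthMs) : lengthMs_(lengthMs) {}

    // Store a press made at absolute time nowMs as an offset into the loop.
    void record(std::uint32_t nowMs, std::uint8_t button) {
        events_.push_back({nowMs % lengthMs_, button});
    }

    // Return buttons whose offsets fall in the absolute window (prevMs, nowMs],
    // handling the wrap at the loop boundary. Assumes it is polled more often
    // than once per loop length.
    std::vector<std::uint8_t> due(std::uint32_t prevMs, std::uint32_t nowMs) const {
        std::vector<std::uint8_t> out;
        const std::uint32_t a = prevMs % lengthMs_;
        const std::uint32_t b = nowMs % lengthMs_;
        const bool wrapped = b < a;
        for (const LoopEvent& e : events_) {
            const bool hit = wrapped ? (e.offsetMs > a || e.offsetMs <= b)
                                     : (e.offsetMs > a && e.offsetMs <= b);
            if (hit) out.push_back(e.button);
        }
        return out;
    }

    void clear() { events_.clear(); }

private:
    std::uint32_t lengthMs_;
    std::vector<LoopEvent> events_;
};
```

In a main loop, each `due(previousTime, currentTime)` result would be turned into chord notes and MIDI events, the same path a live button press takes.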

Challenge: The central challenge was balancing expressive freedom with harmonic constraint. Over-restricting the system risked reducing the user's sense of authorship, while under-restricting it led to harmonically incoherent output that increased cognitive load for novice users. Designing interaction that preserved agency while preventing musically invalid states required careful structuring of harmonic vocabularies and state transitions.
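One way to make "preventing musically invalid states" concrete is a per-chord table of permitted next chords. The write-up does not say how transitions are actually restricted, so the following is a hypothetical sketch. `HarmonicState`, the four-button layout, and the example table are assumptions. The allowed mask could also drive which LEDs are lit, but that use is likewise assumed.

```cpp
#include <array>
#include <cstddef>
#include <cstdint>

// Hypothetical layout: 4 chord buttons. Entry i is a bitmask of the buttons
// allowed to follow chord i (bit j set = button j permitted).
constexpr std::size_t kButtons = 4;
using TransitionTable = std::array<std::uint8_t, kButtons>;

class HarmonicState {
public:
    explicit HarmonicState(const TransitionTable& table) : table_(table) {}

    // Buttons the user may press next; every button is allowed before the first chord.
    std::uint8_t allowedMask() const {
        return hasCurrent_ ? table_[current_]
                           : static_cast<std::uint8_t>((1u << kButtons) - 1);
    }

    // Accept a press only if it is a permitted transition; returns whether it was accepted.
    bool press(std::size_t button) {
        if (button >= kButtons) return false;
        if (!(allowedMask() & (1u << button))) return false;
        current_ = button;
        hasCurrent_ = true;
        return true;
    }

    std::size_t current() const { return current_; }

private:
    TransitionTable table_;
    std::size_t current_ = 0;
    bool hasCurrent_ = false;
};

// Illustrative table only; not the instrument's actual grammar.
constexpr TransitionTable kExample{{0b1111, 0b1001, 0b1010, 0b0011}};
```

Rejecting a disallowed press outright is the most restrictive option. A looser variant could accept the press and only signal the preferred next chords through the LEDs. The choice between these is one lever on the balance between constraint and authorship described above.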

Improvement: Future iterations could investigate how users form internal mental models of constrained harmonic systems over time. Varying the visibility or flexibility of harmonic structure, or introducing adaptive constraint boundaries, could further clarify how different levels of constraint affect perceived authorship, learning, and long-term creative engagement.